2. 99% of Executives Are Misled by AI Advice

As an executive, you’re bombarded with articles and advice on building AI products.

The problem is, a lot of this “advice” comes from other executives who rarely interact with the practitioners actually working with AI. This disconnect leads to misunderstandings, misconceptions, and wasted resources.

A Case Study in Misleading AI Advice

An example of this disconnect in action comes from an interview with Jake Heller, CEO of Casetext.

During the interview (clip linked above), Jake made a statement about AI testing that was widely shared:

One of the things we learned, is that after it passes 100 tests, the odds that it will pass a random distribution of 100k user inputs with 100% accuracy is very high. (emphasis added)

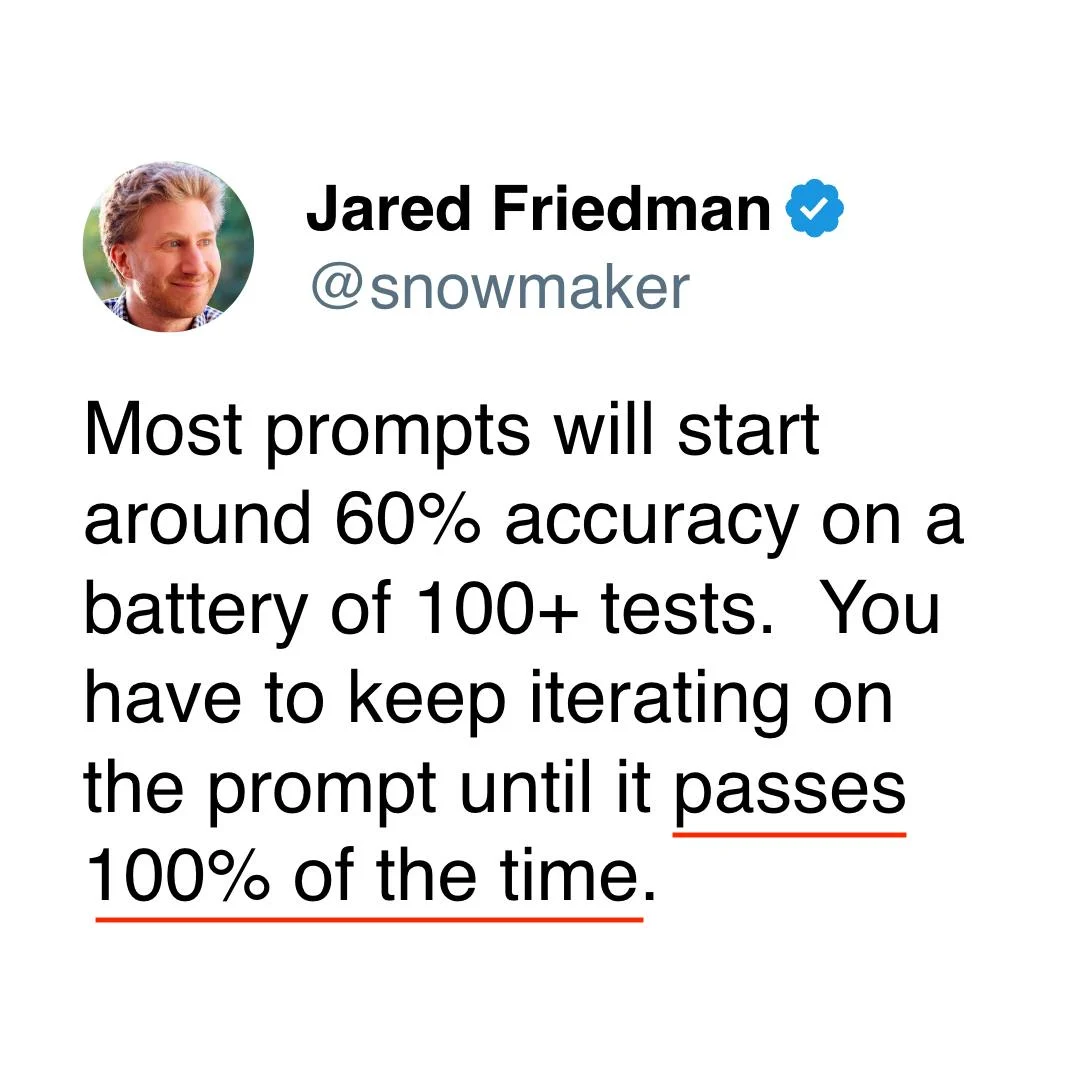

This claim was then amplified by influential figures like Jared Friedman and Garry Tan of Y Combinator, reaching countless founders and executives.

The morning after this advice was shared, I received numerous emails from founders asking if they should aim for 100% test pass rates.

If you’re not hands-on with AI, this advice might sound reasonable. But any practitioner would know it’s deeply flawed.
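A quick back-of-the-envelope calculation shows why. The sketch below is illustrative only: it assumes every input passes or fails independently with a fixed failure rate, and the 0.1% rate is a hypothetical number chosen for the example, not a figure from the interview. It also uses the statistical "rule of three," which approximates the 95% upper bound on a failure rate as 3/n after n trials with zero failures.

```python
import math


def prob_all_pass(failure_rate: float, n_inputs: int) -> float:
    """Probability that n independent inputs all pass at a given failure rate."""
    if failure_rate >= 1.0:
        return 0.0
    # log1p keeps precision when the failure rate is very small.
    return math.exp(n_inputs * math.log1p(-failure_rate))


def rule_of_three_upper_bound(n_passed: int) -> float:
    """Approximate 95% upper bound on the failure rate after n passes, 0 failures."""
    return 3.0 / n_passed


# Hypothetical system that fails on 1 in 1,000 inputs (an assumption for illustration).
failure_rate = 0.001

p_pass_100 = prob_all_pass(failure_rate, 100)
p_pass_100k = prob_all_pass(failure_rate, 100_000)
upper_bound = rule_of_three_upper_bound(100)

print(f"Chance of passing all 100 tests:      {p_pass_100:.1%}")
print(f"Chance of passing all 100,000 inputs: {p_pass_100k:.2e}")
print(f"Failure rate still plausible after 100 clean tests: up to {upper_bound:.0%}")
```

Under these assumptions, a system that fails once in a thousand inputs still passes all 100 tests about 90% of the time, yet its chance of handling 100,000 inputs without a single failure is effectively zero. Put differently, 100 clean tests cannot rule out a failure rate of up to roughly 3%.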

“Perfect” is Flawed

In AI, a perfect score is a red flag. It often means the model has inadvertently been trained on data or prompts that are too similar to the tests. It is like giving a student the answers before an exam: the model will look good on paper but is unlikely to perform well in the real world.
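One way your team might check for this kind of leakage is to look for test cases that closely resemble training examples. The sketch below is a minimal illustration, not a production method: the function names, the word-overlap (Jaccard) similarity measure, the 0.8 threshold, and the sample prompts are all assumptions made up for this example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and reduce it to a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap between two token sets, from 0.0 (none) to 1.0 (identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_leaks(train: list[str], tests: list[str], threshold: float = 0.8):
    """Return (test_case, similarity) pairs that look too close to training data."""
    train_tokens = [tokenize(t) for t in train]
    leaks = []
    for test in tests:
        test_tokens = tokenize(test)
        best = max((jaccard(test_tokens, tr) for tr in train_tokens), default=0.0)
        if best >= threshold:
            leaks.append((test, best))
    return leaks


# Hypothetical prompts, invented for illustration.
train_examples = [
    "Summarize the indemnification clause in this contract.",
    "What is the statute of limitations for fraud?",
]
test_cases = [
    "Summarize the indemnification clause in this contract!",
    "Draft a motion to dismiss for lack of jurisdiction.",
]

leaks = find_leaks(train_examples, test_cases)
for case, score in leaks:
    print(f"Possible leak (similarity {score:.2f}): {case}")
```

Here the first test case differs from a training example only by punctuation, so it gets flagged, while the second is genuinely new. Word overlap is a crude signal that misses paraphrases, so treat a check like this as a first pass rather than proof that your data is clean.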

If you are sure your data is clean but you’re still getting 100% accuracy, chances are your test is too weak or not measuring what matters. Tests that always pass don’t help you improve; they’re just giving you a false sense of security.

Most importantly, when your models have perfect scores, you lose the ability to differentiate between them. You won’t be able to identify why one model is better than another, or strategize how to make further improvements.
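To make this concrete, here is a small sketch with two hypothetical models. The pass/fail results are invented for illustration and do not come from any real system.

```python
def pass_rate(results: list[bool]) -> float:
    """Fraction of test cases that passed."""
    return sum(results) / len(results)


# Hypothetical results, invented for illustration.
easy_suite = {
    "model_a": [True] * 20,
    "model_b": [True] * 20,
}
hard_suite = {
    "model_a": [True] * 14 + [False] * 6,
    "model_b": [True] * 18 + [False] * 2,
}

easy_scores = {name: pass_rate(r) for name, r in easy_suite.items()}
hard_scores = {name: pass_rate(r) for name, r in hard_suite.items()}

for name in easy_scores:
    print(f"{name}: easy suite {easy_scores[name]:.0%}, hard suite {hard_scores[name]:.0%}")
```

On the easy suite both models score 100%, so the test tells you nothing about which one to ship. Only the harder suite, where failures actually occur, reveals a gap between them and shows you where to look for improvements.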

The goal of evaluations isn’t to pat yourself on the back for a perfect score.

It’s to uncover areas for improvement and ensure your AI is truly solving the problems it’s meant to address. By focusing on real-world performance and continuous improvement, you’ll be much better positioned to create AI that delivers genuine value. Evals are a big topic, and we’ll dive into them more in a future chapter.

Moving Forward

When you’re not hands-on with AI, it’s hard to separate hype from reality. Here are some key takeaways to keep in mind:

- Be skeptical of advice or metrics that sound too good to be true.

- Focus on real-world performance and continuous improvement.

- Seek advice from experienced AI practitioners who can communicate effectively with executives (you’ve come to the right place!).

In a future chapter, we’ll dive deeper into how to test AI and introduce a data review toolkit. First, we’ll look at the biggest mistake executives make when investing in AI.